Skatesonic – ISEA / IDEO / Villa Montalvo

7 April 2020

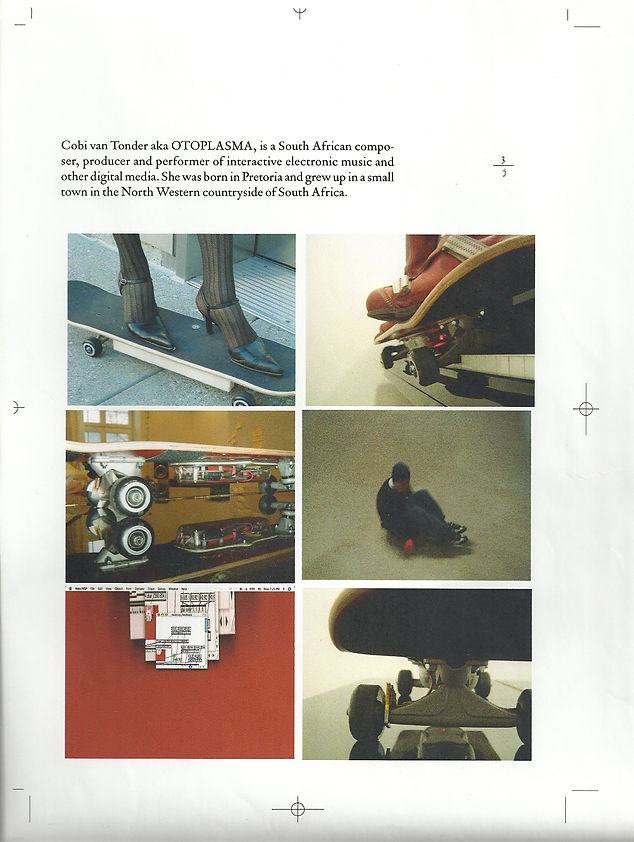

Skatesonic is a human / computer / skate / music interactive system. The skateboard captures movement data, including speed, tilt, distance off the ground, and sound from a microphone built into the board. This data is used to register different tricks and is sent via Bluetooth to a computer, which then plays back skateboard-generated music.
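As a rough illustration of that data-to-music flow, the sketch below maps a single board reading to a musical note. It is not the software used in Skatesonic: the `BoardSample` fields, the trick names, the thresholds, and the choice of MIDI-style note and velocity numbers are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class BoardSample:
    """One hypothetical sensor reading from a board (all fields are assumptions)."""
    speed: float      # metres per second
    tilt: float       # degrees; the sign indicates which edge of the board is down
    height: float     # metres off the ground
    mic_level: float  # 0.0 (silent) to 1.0 (loud), from the onboard microphone


def classify_trick(sample: BoardSample) -> str:
    """Label a reading with a coarse trick name using made-up thresholds."""
    if sample.height > 0.15:
        return "ollie"
    if abs(sample.tilt) > 20.0:
        return "carve"
    if sample.speed > 0.5:
        return "roll"
    return "idle"


# Hypothetical base pitch (MIDI-style note number) for each trick.
BASE_NOTE = {"idle": 48, "roll": 60, "carve": 64, "ollie": 72}


def sample_to_note(sample: BoardSample) -> Tuple[int, int]:
    """Map a reading to a (note, velocity) pair."""
    trick = classify_trick(sample)
    # Faster riding raises the pitch by up to one octave (12 semitones).
    pitch_offset = min(12, int(sample.speed * 2))
    note = BASE_NOTE[trick] + pitch_offset
    # A louder microphone signal (for example, a rougher surface) plays louder.
    level = max(0.0, min(1.0, sample.mic_level))
    velocity = int(level * 127)
    return note, velocity
```

In this sketch, each connected board could run the same mapping with its own `BASE_NOTE` table, which is one way a group of skaters might each end up with a distinct "instrument."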

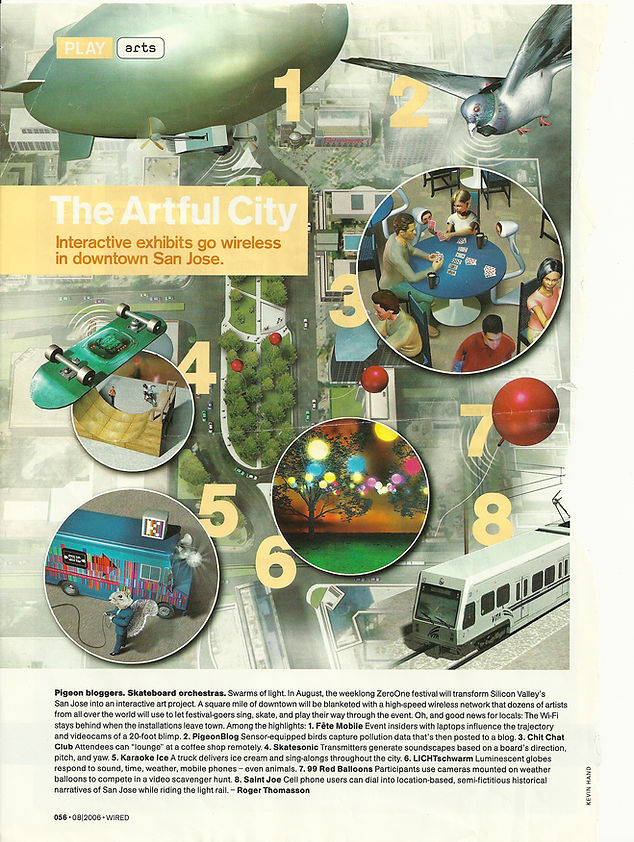

The music varies depending on the surface the skateboard rolls over and the different ways people ride. Multiple skateboards can be connected in unison, allowing a group of skateboarders to each play their own “instrument.” Skatesonic was created during a ZERO ONE / IDEO artist-in-residence program in San Jose, California, in collaboration with IDEO, Palo Alto. It was also performed at P.Arty in Seoul, Korea, in 2007.

Neal Stephenson’s 1992 novel Snow Crash was a major inspiration for Skatesonic. In particular, the character Y.T., the young skateboard courier who speeds through the city on smart wheels, led me to consider the translation of skateboard riding into code — and from code into music.

Snow Crash was itself influenced by Julian Jaynes’ book The Origin of Consciousness in the Breakdown of the Bicameral Mind. Jaynes proposed “a state in which cognitive functions were once divided between one part of the brain which appears to be ‘speaking’, and a second part which listens and obeys — a bicameral mind” (Wikipedia). The relationship between language and consciousness is explored deeply in this theory.

Where does music — as an abstract form of language — fit within this speculative spectrum of consciousness?

Jaynes theorised that a shift away from bicameralism marked the beginning of introspection and consciousness as we understand it today. Residual traces of the bicameral mind, according to Jaynes, include religion, hypnosis, possession, schizophrenia, and auditory hallucinations. More recent neuroimaging studies suggest right-hemisphere involvement in auditory hallucinations, lending further support to aspects of Jaynes’ neurological model (Wikipedia).

The plot of Snow Crash involves an attempt to return humans to their bicameral, pre-conscious state, unfolding within a virtual reality environment called the Metaverse, imagined as an urban landscape. I began to imagine how the music and soundtrack of Skatesonic might function as an abstract parallel reality — how a skater’s movements and contact with urban surfaces could be translated directly into sound.

Skatesonic was featured by Makezine:

http://makezine.com/2008/12/06/skate-sonic-skateboarding/

As a human–computer interface, Skatesonic raises speculative questions: could we someday navigate virtual landscapes by controlling software with skateboards rather than with fingers on a keyboard or a hand on a mouse? Could skaters improve their skills through sonic feedback, learning through sound as much as through vision or touch?